用深度學習神經網路製作自動編碼解碼器

資料來源:https://medium.com/datadriveninvestor/deep-autoencoder-using-keras-b77cd3e8be95在這個案例中,我們將製作一個自動編碼器,他可以將圖片利用深度神經網路編碼成維度較小的資料,但這個維度較小的資料卻仍然保有原來資料的重要資訊,這些壓縮過的資料可以再經由解碼器還原成原來的資訊. 實作上我們將編碼器(encoder)接上解碼器(decoder)做訓練,編碼器:輸入資料為784維度的圖片資料,利用密集層(Dense layer)壓縮成32維度;解碼器:將32維度的資料利用密集層(Dense layer)展開成32維度.在訓練時,將編碼器和解碼器接在一起訓練,訓練時輸入資料為圖片28*28得陣列reshape成784維度的資料,輸出資料則和輸入資料相同,目的是為了訓練機器能夠在壓縮和展開資料時盡可能減少資料的損失.

這個案例中我們將實作mnist灰階數字圖片的編碼與解碼

from keras.datasets import mnist

from keras.layers import Input, Dense

from keras.models import Model

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

#載入資料

(X_train, _), (X_test, _) = mnist.load_data()

X_train = X_train.astype('float32')/255

X_test = X_test.astype('float32')/255

X_train = X_train.reshape(len(X_train), np.prod(X_train.shape[1:]))

X_test = X_test.reshape(len(X_test), np.prod(X_test.shape[1:]))

print(X_train.shape)

print(X_test.shape)

(60000, 784) (10000, 784)

# 輸入資料(輸入的資料為[0,1]之間的數字,維度是28*28=784維度的一維陣列(784,))

input_img= Input(shape=(784,))

#編碼器(都使用relu函數做非線性轉換)

encoded = Dense(units=128, activation='relu')(input_img)

encoded = Dense(units=64, activation='relu')(encoded)

encoded = Dense(units=32, activation='relu')(encoded)

#解碼器(輸出層使用sigmoid非線性函數將資料壓縮在[0,1]之間)

decoded = Dense(units=64, activation='relu')(encoded)

decoded = Dense(units=128, activation='relu')(decoded)

decoded = Dense(units=784, activation='sigmoid')(decoded)

#設定自動編碼器(編碼器+解碼器)的輸入與輸出

autoencoder=Model(input_img, decoded)

#設定編碼器的輸入與輸出

encoder = Model(input_img, encoded)

#編碼器摘要

autoencoder.summary()

_________________________________________________________________ Layer (type) Output Shape Param # ================================================================= input_1 (InputLayer) (None, 784) 0 _________________________________________________________________ dense_1 (Dense) (None, 128) 100480 _________________________________________________________________ dense_2 (Dense) (None, 64) 8256 _________________________________________________________________ dense_3 (Dense) (None, 32) 2080 _________________________________________________________________ dense_4 (Dense) (None, 64) 2112 _________________________________________________________________ dense_5 (Dense) (None, 128) 8320 _________________________________________________________________ dense_6 (Dense) (None, 784) 101136 ================================================================= Total params: 222,384 Trainable params: 222,384 Non-trainable params: 0 _________________________________________________________________

# 設定編碼器的訓練方法(optimizer, loss, metrics) autoencoder.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# 訓練編碼器(輸入=輸出)

autoencoder.fit(X_train, X_train,

epochs=50,

batch_size=256,

shuffle=True,

validation_data=(X_test, X_test))

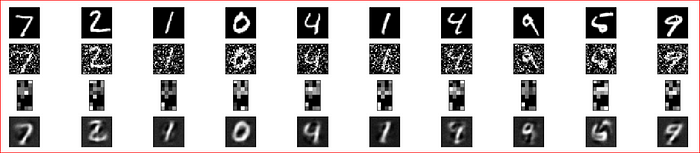

驗證編碼器是否具備去除圖片雜訊的功能

#loading only images and not their labels (X_train, _), (X_test, _) = mnist.load_data() X_train = X_train.astype('float32')/255 X_test = X_test.astype('float32')/255 X_train = X_train.reshape(len(X_train), np.prod(X_train.shape[1:])) X_test = X_test.reshape(len(X_test), np.prod(X_test.shape[1:])) #製作雜訊 X_train_noisy = X_train + np.random.normal(loc=0.0, scale=0.5, size=X_train.shape) X_train_noisy = np.clip(X_train_noisy, 0., 1.) X_test_noisy = X_test + np.random.normal(loc=0.0, scale=0.5, size=X_test.shape) X_test_noisy = np.clip(X_test_noisy, 0., 1.) print(X_train_noisy.shape) print(X_test_noisy.shape)(60000, 784) (10000, 784)plt.imshow(X_train_noisy[0].reshape(28,28))

#Input image input_img= Input(shape=(784,)) # encoded and decoded layer for the autoencoder encoded = Dense(units=128, activation='relu')(input_img) encoded = Dense(units=64, activation='relu')(encoded) encoded = Dense(units=32, activation='relu')(encoded) decoded = Dense(units=64, activation='relu')(encoded) decoded = Dense(units=128, activation='relu')(decoded) decoded = Dense(units=784, activation='sigmoid')(decoded) # Building autoencoder autoencoder=Model(input_img, decoded) #extracting encoder encoder = Model(input_img, encoded) # compiling the autoencoder autoencoder.compile(optimizer='adadelta', loss='binary_crossentropy', metrics=['accuracy'])

# Fitting the noise trained data to the autoencoder

autoencoder.fit(X_train_noisy, X_train_noisy,

epochs=100,

batch_size=256,

shuffle=True,

validation_data=(X_test_noisy, X_test_noisy))

# reconstructing the image from autoencoder and encoder encoded_imgs = encoder.predict(X_test_noisy) predicted = autoencoder.predict(X_test_noisy)

# plotting the noised image, encoded image and the reconstructed image

plt.figure(figsize=(40, 4))

for i in range(10):

# display original images

ax = plt.subplot(4, 20, i + 1)

plt.imshow(X_test[i].reshape(28, 28))

plt.gray()

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

# display noised images

ax = plt.subplot(4, 20, i + 1+20)

plt.imshow(X_test_noisy[i].reshape(28, 28))

plt.gray()

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

# display encoded images

ax = plt.subplot(4, 20, 2*20+i + 1 )

plt.imshow(encoded_imgs[i].reshape(8,4))

plt.gray()

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

# display reconstruction images

ax = plt.subplot(4, 20, 3*20 +i+ 1)

plt.imshow(predicted[i].reshape(28, 28))

plt.gray()

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

plt.show()

編碼器的確可以把雜訊過濾掉僅留下重要的資訊